U.S. Follows U.K.'s Lead With Age Verification Laws On Adult Sites on OnlyLikeFans

New Age Verification Laws Hit U.S. States

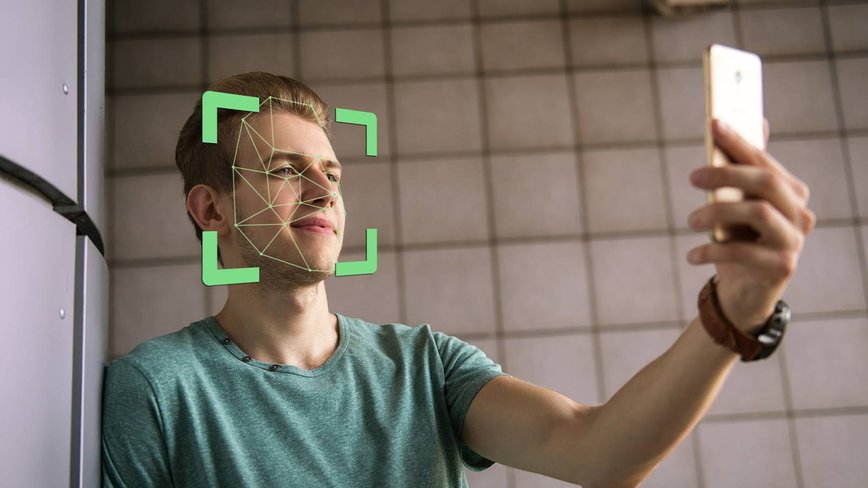

The U.K. set the stage in July, when the age-check requirements of its Online Safety Act took effect, requiring adult websites to verify users' ages through methods such as ID checks or facial age estimation. Now the U.S. is following with similar measures. According to Komu, states such as Texas and Florida have joined more than 25 others in enforcing strict age verification rules on adult platforms. Rather than pay for costly ID verification services, some websites have opted to block users in these states entirely.

States Enforcing Age Checks

The wave of legislation spans numerous states. Alabama, Arkansas, Florida, Georgia, Idaho, Indiana, and Kentucky are among those implementing these laws. Missouri, the latest to join, announced its decision last week, with Attorney General Catherine Hanaway highlighting the importance of shielding minors from online pornography.

"This rule is a milestone in our effort to protect Missouri children from the devastating harms of online pornography," Hanaway emphasized.

Beyond Adult Websites: Wider Implications

The ripple effect of these laws isn't limited to explicit content sites. In the U.K., platforms like Discord, Reddit, and even Xbox have had to adjust to the Online Safety Act's requirements. Despite a petition with hundreds of thousands of signatures criticizing the legislation as overly restrictive, the measures remain in place.

The U.S. could face similar challenges, with critics fearing that a broad definition of "adult" content could sweep in video games, LGBTQ+ discussions, and even political content. Yet, much like the U.K.'s Supreme Court, the U.S. has so far dismissed these concerns, with the U.S. Supreme Court upholding Texas's age verification law in June 2025.

Europe Joins the Movement

Age verification isn't just a U.K. and U.S. affair. Five EU countries (Denmark, Greece, Spain, France, and Italy) are preparing to trial an age verification app, with full implementation targeted for 2026. The trend reflects a growing worldwide focus on online safety, even as debate continues over the scope and impact of these measures.